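To send the contents of a file over the network, the usual steps are: 1. call read to copy the file contents into a user-space buffer; 2. call send (or write) to send the contents of that buffer.

The data copies involved are: disk -> kernel file cache -> user buffer -> kernel network buffer.

With sendfile, the copy path becomes: disk -> kernel file cache -> kernel network buffer.

So sendfile saves one data copy and, because one system call replaces two, it also saves two switches between kernel space and user space. Both make sending the data more efficient.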
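Seen from user space, the two approaches can be sketched as follows. This is a minimal illustration, assuming Linux and a kernel recent enough that sendfile accepts a pipe as its output descriptor; the helper names copy_with_read_write, copy_with_sendfile and demo_sendfile are made up for this example, and a pipe stands in for the network socket.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/sendfile.h>
#include <sys/types.h>
#include <unistd.h>

/* Classic path: every chunk is copied into a user buffer and back out. */
ssize_t copy_with_read_write(int in_fd, int out_fd)
{
    char buf[4096];
    ssize_t total = 0, n;

    while ((n = read(in_fd, buf, sizeof buf)) > 0) {
        ssize_t off = 0;
        while (off < n) {                    /* write() may be partial */
            ssize_t w = write(out_fd, buf + off, (size_t)(n - off));
            if (w < 0)
                return -1;
            off += w;
        }
        total += n;
    }
    return n < 0 ? -1 : total;
}

/* sendfile path: the kernel moves the data, no user buffer is involved. */
ssize_t copy_with_sendfile(int in_fd, int out_fd, size_t count)
{
    off_t offset = 0;                        /* advanced by sendfile itself */
    size_t left = count;

    while (left > 0) {
        ssize_t n = sendfile(out_fd, in_fd, &offset, left);
        if (n < 0)
            return -1;
        if (n == 0)                          /* end of file reached early */
            break;
        left -= (size_t)n;
    }
    return (ssize_t)(count - left);
}

/* Round trip through a pipe with both helpers; returns 0 on success. */
int demo_sendfile(void)
{
    const char msg[] = "hello sendfile";
    const size_t n = sizeof msg - 1;
    char a[32] = {0}, b[32] = {0};
    int p[2];
    FILE *tmp = tmpfile();

    if (tmp == NULL || pipe(p) != 0)
        return -1;
    assert(n < sizeof a);
    if (fwrite(msg, 1, n, tmp) != n || fflush(tmp) != 0)
        return -1;
    int fd = fileno(tmp);

    if (copy_with_sendfile(fd, p[1], n) != (ssize_t)n)
        return -1;
    if (read(p[0], a, n) != (ssize_t)n)
        return -1;

    if (lseek(fd, 0, SEEK_SET) != 0)         /* rewind for the read/write path */
        return -1;
    if (copy_with_read_write(fd, p[1]) != (ssize_t)n)
        return -1;
    if (read(p[0], b, n) != (ssize_t)n)
        return -1;

    fclose(tmp);
    close(p[0]);
    close(p[1]);
    return (memcmp(a, msg, n) == 0 && memcmp(b, msg, n) == 0) ? 0 : -1;
}
```

Both helpers deliver the same bytes; the difference is only in how many times the data crosses the user/kernel boundary on the way.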

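First, the overall call flow:

The function splice_direct_to_actor contains a loop. In each iteration it calls do_splice_to to copy data into the kernel file cache, and then calls actor (direct_splice_actor) to copy that data into the kernel network buffer.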
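The shape of that loop can be modeled in plain user-space C. The sketch below is a toy, not kernel code: every toy_ name and the 8-byte capacity are invented for illustration, and it copies bytes with memcpy where the kernel would pass page references.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define TOY_PIPE_CAP 8   /* hypothetical capacity of the intermediate buffer */

struct toy_src  { const char *data; size_t len, pos; };
struct toy_pipe { char data[TOY_PIPE_CAP]; size_t len; };
struct toy_sink { char data[64]; size_t len; };

/* Stand-in for do_splice_to(): move up to `want` bytes from the source into the pipe. */
size_t toy_splice_to(struct toy_src *in, struct toy_pipe *pipe, size_t want)
{
    size_t n = in->len - in->pos;

    if (n > want)
        n = want;
    if (n > TOY_PIPE_CAP)
        n = TOY_PIPE_CAP;
    memcpy(pipe->data, in->data + in->pos, n);
    in->pos += n;
    pipe->len = n;
    return n;
}

/* Stand-in for the actor: drain whatever the pipe holds into the sink. */
size_t toy_actor(struct toy_pipe *pipe, struct toy_sink *out)
{
    size_t room = sizeof out->data - out->len;
    size_t n = pipe->len < room ? pipe->len : room;

    memcpy(out->data + out->len, pipe->data, n);
    out->len += n;
    pipe->len -= n;
    return n;
}

/* The two-phase loop: fill the pipe, then let the actor empty it. */
size_t toy_splice_direct(struct toy_src *in, struct toy_sink *out, size_t len)
{
    struct toy_pipe pipe = { .len = 0 };
    size_t bytes = 0;

    while (len) {
        size_t read_len = toy_splice_to(in, &pipe, len);
        if (read_len == 0)          /* source exhausted */
            break;
        size_t ret = toy_actor(&pipe, out);
        bytes += ret;
        len -= ret;
        if (ret < read_len)         /* sink could not take everything */
            break;
    }
    return bytes;
}
```

With a 20-byte source the loop runs three times (8, 8 and 4 bytes) and stops when the source returns nothing more.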

Part 1: From disk to the kernel file cache

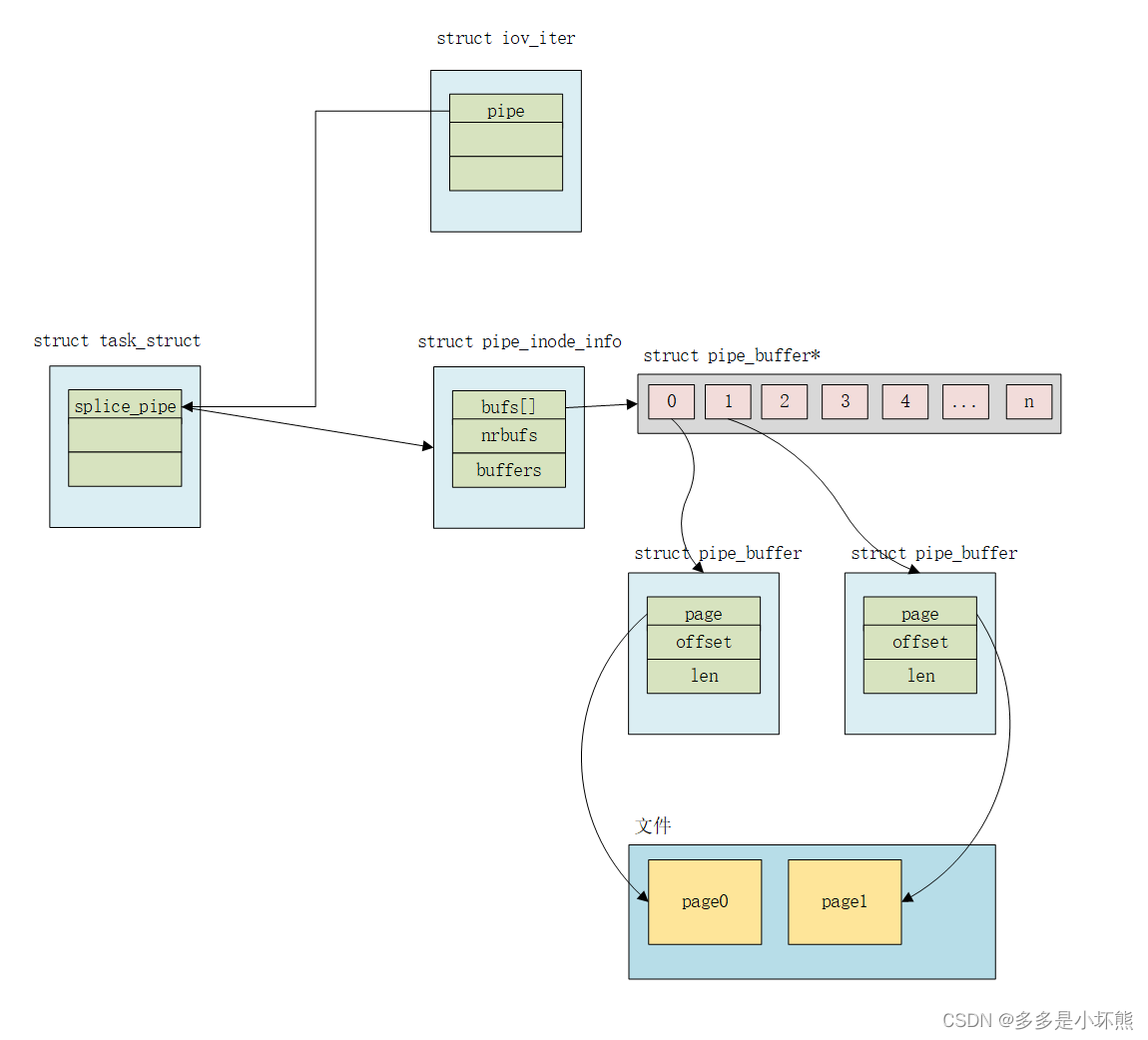

Copying data from disk into the kernel file cache involves the following two data structures:

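    struct pipe_inode_info {
        struct mutex mutex;
        wait_queue_head_t wait;                 /* pipe/FIFO wait queue */
        unsigned int nrbufs, curbuf, buffers;
        unsigned int readers;                   /* number of current readers of this pipe */
        unsigned int writers;                   /* number of current writers of this pipe */
        unsigned int files;
        unsigned int waiting_writers;           /* number of writers sleeping in the wait queue */
        unsigned int r_counter;                 /* like readers, but used when waiting for a process that reads the FIFO */
        unsigned int w_counter;                 /* like writers, but used when waiting for a process that writes the FIFO */
        struct page *tmp_page;
        struct fasync_struct *fasync_readers;   /* asynchronous I/O notification via signals */
        struct fasync_struct *fasync_writers;   /* asynchronous I/O notification via signals */
        struct pipe_buffer *bufs;               /* pipe buffer descriptors */
        struct user_struct *user;
    };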

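    struct pipe_buffer {
        struct page *page;                      /* address of the descriptor of the buffer's page frame */
        /*
         * offset: current position of the valid data inside the page frame
         * len:    length of the valid data inside the page frame
         */
        unsigned int offset, len;
        const struct pipe_buf_operations *ops;  /* address of the buffer's method table */
        unsigned int flags;
        unsigned long private;
    };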

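struct pipe_inode_info has a member struct pipe_buffer *bufs, which points to an array of struct pipe_buffer. The page member of each struct pipe_buffer points to a page frame (of type struct page), and that page frame holds file contents read from disk. The figure above gives a rough picture of how the data is stored.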
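The relationship between the ring of buffers and the page frames can be sketched with a small user-space model. Everything prefixed toy_ is hypothetical; the slot computation `(curbuf + nrbufs) & (buffers - 1)` assumes, as the fields nrbufs, curbuf and buffers suggest, that the array is used as a power-of-two ring.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_BUFFERS 4u              /* must be a power of two for the mask trick */

struct toy_page { int refcount; };  /* stands in for struct page */

struct toy_pipe_buffer {
    struct toy_page *page;          /* which page frame holds the data */
    unsigned int offset, len;       /* valid bytes inside that page */
};

struct toy_pipe {
    unsigned int nrbufs, curbuf, buffers;
    struct toy_pipe_buffer bufs[TOY_BUFFERS];
};

/* Append a reference to `page`; returns the slot used, or -1 if the ring is full. */
int toy_pipe_push(struct toy_pipe *pipe, struct toy_page *page,
                  unsigned int offset, unsigned int len)
{
    if (pipe->nrbufs == pipe->buffers)
        return -1;

    unsigned int slot = (pipe->curbuf + pipe->nrbufs) & (pipe->buffers - 1);

    pipe->bufs[slot].page = page;   /* pointer only: page contents are not copied */
    pipe->bufs[slot].offset = offset;
    pipe->bufs[slot].len = len;
    page->refcount++;               /* the pipe now holds a reference to the page */
    pipe->nrbufs++;
    return (int)slot;
}
```

Starting with curbuf = 3 in a four-slot ring, the first push lands in slot 3 and the second wraps around to slot 0. Only a pointer, an offset and a length are stored per slot, which is why no file data has to be duplicated at this stage.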

The code that reads data from disk into page frames:

    static ssize_t generic_file_buffered_read(struct kiocb *iocb,
            struct iov_iter *iter, ssize_t written)
    {
        struct file *filp = iocb->ki_filp;
        struct address_space *mapping = filp->f_mapping; /* address_space object of the file */
        struct inode *inode = mapping->host;    /* owner of the address space, i.e. the inode */
        struct file_ra_state *ra = &filp->f_ra;
        loff_t *ppos = &iocb->ki_pos;           /* current file position of this I/O */
        pgoff_t index;                          /* logical number of the page holding the first requested byte */
        pgoff_t last_index;                     /* logical number of the page just past the last requested byte */
        pgoff_t prev_index;
        unsigned long offset;                   /* offset into pagecache page: offset of the first byte within its page */
        unsigned int prev_offset;
        int error = 0;

        /* the position is beyond the maximum file size */
        if (unlikely(*ppos >= inode->i_sb->s_maxbytes))
            return 0;
        iov_iter_truncate(iter, inode->i_sb->s_maxbytes);

        index = *ppos >> PAGE_SHIFT;            /* current logical page */
        prev_index = ra->prev_pos >> PAGE_SHIFT;
        prev_offset = ra->prev_pos & (PAGE_SIZE-1);
        last_index = (*ppos + iter->count + PAGE_SIZE-1) >> PAGE_SHIFT; /* end of the range of logical pages to read (exclusive) */
        offset = *ppos & ~PAGE_MASK;            /* offset of the file position within its page */

        for (;;) {
            struct page *page;
            pgoff_t end_index;
            loff_t isize;
            unsigned long nr, ret;

            /* check the TIF_NEED_RESCHED flag of the current task; if it is set, call schedule */
            cond_resched();
    find_page:
            if (fatal_signal_pending(current)) {
                error = -EINTR;
                goto out;
            }

            /* search the page cache for the descriptor of the page holding the requested data */
            page = find_get_page(mapping, index);
            if (!page) {
                if (iocb->ki_flags & IOCB_NOWAIT)
                    goto would_block;
                page_cache_sync_readahead(mapping,
                        ra, filp,
                        index, last_index - index);
                page = find_get_page(mapping, index);
                if (unlikely(page == NULL))
                    goto no_cached_page;
            }
    page_ok:
            /*
             * i_size must be checked after we know the page is Uptodate.
             *
             * Checking i_size after the check allows us to calculate
             * the correct value for "nr", which means the zero-filled
             * part of the page is not copied back to userspace (unless
             * another truncate extends the file - this is desired though).
             */

            isize = i_size_read(inode);             /* file size */
            end_index = (isize - 1) >> PAGE_SHIFT;  /* index of the last page */
            /* index is beyond the pages the file contains */
            if (unlikely(!isize || index > end_index)) {
                put_page(page);
                goto out;
            }

            /* nr is the maximum number of bytes to copy from this page */
            nr = PAGE_SIZE;                         /* number of bytes to read */
            if (index == end_index) {               /* last page */
                nr = ((isize - 1) & ~PAGE_MASK) + 1;
                if (nr <= offset) {
                    put_page(page);
                    goto out;
                }
            }
            nr = nr - offset;

            /* If users can be writing to this page using arbitrary
             * virtual addresses, take care about potential aliasing
             * before reading the page on the kernel side.
             */
            if (mapping_writably_mapped(mapping))
                flush_dcache_page(page);

            /*
             * When a sequential read accesses a page several times,
             * only mark it as accessed the first time.
             */
            if (prev_index != index || offset != prev_offset)
                /* set PG_referenced or PG_active: the page is in use and should not be swapped out */
                mark_page_accessed(page);
            prev_index = index;

            /*
             * Ok, we have the page, and it's up-to-date, so
             * now we can copy it to user space...
             */

            /* copy the data into iter */
            ret = copy_page_to_iter(page, offset, nr, iter);
            offset += ret;
            index += offset >> PAGE_SHIFT;
            offset &= ~PAGE_MASK;
            prev_offset = offset;

            put_page(page);                         /* drop the reference on the page descriptor */
            written += ret;
            if (!iov_iter_count(iter))
                goto out;
            if (ret < nr) {
                error = -EFAULT;
                goto out;
            }
            continue;

            /* the code for the labels jumped to above (would_block, no_cached_page)
             * is not shown in this excerpt */
        }

    out:
        /* update the read-ahead state ra */
        ra->prev_pos = prev_index;
        ra->prev_pos <<= PAGE_SHIFT;
        ra->prev_pos |= prev_offset;

        /* update the file position */
        *ppos = ((loff_t)index << PAGE_SHIFT) + offset;
        /* update the file access time */
        file_accessed(filp);
        return written ? written : error;
    }

The part of the code that associates a page frame with a pipe_buffer:

    static size_t copy_page_to_iter_pipe(struct page *page, size_t offset, size_t bytes,
                                         struct iov_iter *i)
    {
        struct pipe_inode_info *pipe = i->pipe;
        struct pipe_buffer *buf;
        size_t off;
        int idx;

        if (unlikely(bytes > i->count))
            bytes = i->count;

        if (unlikely(!bytes))
            return 0;

        if (!sanity(i))
            return 0;

        off = i->iov_offset;
        idx = i->idx;
        buf = &pipe->bufs[idx];
        if (off) {
            if (offset == off && buf->page == page) {
                /* merge with the last one */
                buf->len += bytes;
                i->iov_offset += bytes;
                goto out;
            }
            idx = next_idx(idx, pipe);              /* advance idx */
            buf = &pipe->bufs[idx];
        }
        if (idx == pipe->curbuf && pipe->nrbufs)    /* the buffer ring is full */
            return 0;
        pipe->nrbufs++;
        buf->ops = &page_cache_pipe_buf_ops;
        get_page(buf->page = page);                 /* make buf->page point to page */
        buf->offset = offset;
        buf->len = bytes;
        i->iov_offset = offset + bytes;
        i->idx = idx;
    out:
        i->count -= bytes;
        return bytes;
    }

Part 2: From the kernel file cache to the kernel network buffer

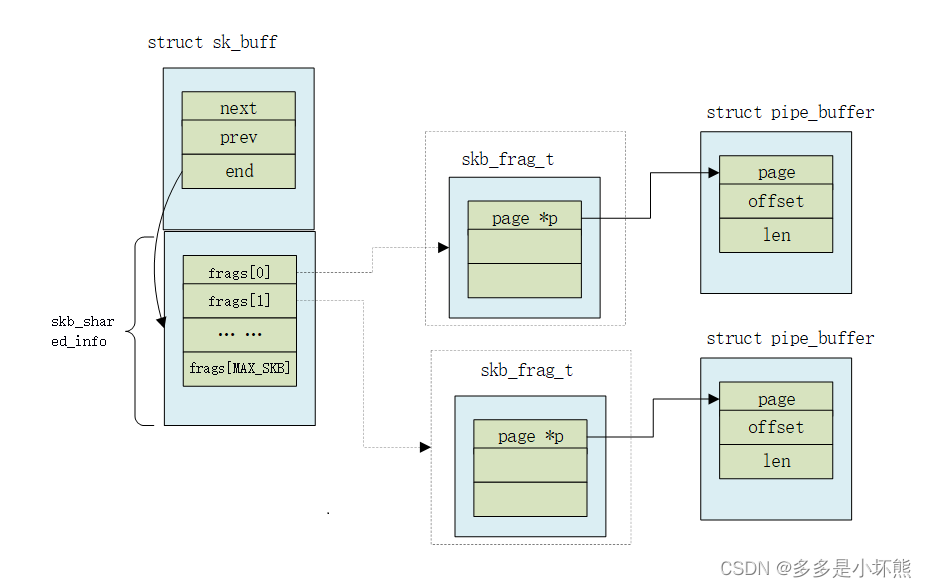

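First, here is how several data structures in the kernel network layer relate to one another (see the figure above).

Part of the data read from the file is already held in the array that pipe->bufs points to, and splice_from_pipe_feed walks this array.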
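Before reading the kernel function, it may help to see the walk in isolation. The sketch below is a user-space toy under stated assumptions: the toy_ names are invented, the actor is a hypothetical sink that accepts at most 1000 bytes per call, and confirmation, release and wake-up handling are left out.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_BUFFERS 4u                       /* power of two, as in the ring above */

struct toy_pipe_buffer { unsigned int offset, len; };

struct toy_pipe {
    unsigned int nrbufs, curbuf, buffers;
    struct toy_pipe_buffer bufs[TOY_BUFFERS];
};

typedef int (*toy_actor)(struct toy_pipe_buffer *buf, unsigned int len);

/* Hypothetical actor: accepts at most 1000 bytes per call. */
int toy_limited_actor(struct toy_pipe_buffer *buf, unsigned int len)
{
    (void)buf;
    return len > 1000 ? 1000 : (int)len;
}

/*
 * Returns 0 once *total_len bytes have been consumed, 1 if the pipe ran
 * dry first, and the actor's value if it reports an error or no progress.
 */
int toy_feed(struct toy_pipe *pipe, unsigned int *total_len,
             unsigned int *num_spliced, toy_actor actor)
{
    while (pipe->nrbufs) {
        struct toy_pipe_buffer *buf = &pipe->bufs[pipe->curbuf];
        unsigned int len = buf->len < *total_len ? buf->len : *total_len;
        int ret = actor(buf, len);

        if (ret <= 0)
            return ret;

        buf->offset += (unsigned int)ret;    /* skip what was consumed */
        buf->len -= (unsigned int)ret;
        *num_spliced += (unsigned int)ret;
        *total_len -= (unsigned int)ret;

        if (!buf->len) {                     /* buffer drained: advance the ring */
            pipe->curbuf = (pipe->curbuf + 1) & (pipe->buffers - 1);
            pipe->nrbufs--;
        }
        if (!*total_len)
            return 0;
    }
    return 1;
}
```

A buffer stays at the head of the ring until its len reaches zero, so an actor that takes only part of a buffer is simply called again with the advanced offset. The kernel function itself follows.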

    static int splice_from_pipe_feed(struct pipe_inode_info *pipe, struct splice_desc *sd,
                                     splice_actor *actor)
    {
        int ret;

        while (pipe->nrbufs) {      /* number of pipe_buffers that hold valid data */
            struct pipe_buffer *buf = pipe->bufs + pipe->curbuf;

            sd->len = buf->len;
            if (sd->len > sd->total_len)
                sd->len = sd->total_len;

            ret = pipe_buf_confirm(pipe, buf);
            if (unlikely(ret)) {
                if (ret == -ENODATA)
                    ret = 0;
                return ret;
            }

            ret = actor(pipe, buf, sd);     /* pipe_to_sendpage */
            if (ret <= 0)
                return ret;

            buf->offset += ret;
            buf->len -= ret;

            sd->num_spliced += ret;
            sd->len -= ret;
            sd->pos += ret;
            sd->total_len -= ret;

            if (!buf->len) {
                pipe_buf_release(pipe, buf);
                pipe->curbuf = (pipe->curbuf + 1) & (pipe->buffers - 1);    /* index of the next buffer */
                pipe->nrbufs--;
                if (pipe->files)
                    sd->need_wakeup = true;
            }

            if (!sd->total_len)
                return 0;
        }

        return 1;
    }

pipe_to_sendpage obtains the file that corresponds to the socket:

    struct file *file = sd->u.file;

    return file->f_op->sendpage(file, buf->page, buf->offset,
                                sd->len, &pos, more);

The page frame page mentioned below is buf->page.

sock_sendpage obtains the struct socket that corresponds to the file:

    struct socket *sock;
    int flags;

    sock = file->private_data;

inet_sendpage obtains the corresponding struct sock:

    struct sock *sk = sock->sk;
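The hops from file to socket to sock can be put together in a small user-space model. This is only a sketch: the toy_ types are heavily reduced stand-ins for the kernel structures, the functions call each other directly where the kernel goes through further operation tables, and the last function merely counts bytes.

```c
#include <assert.h>
#include <stddef.h>

struct toy_sock   { int queued_bytes; };        /* stands in for struct sock */
struct toy_socket { struct toy_sock *sk; };     /* stands in for struct socket */

struct toy_file;
struct toy_file_ops {
    int (*sendpage)(struct toy_file *file, const void *page, int off, int size);
};
struct toy_file {                               /* stands in for struct file */
    const struct toy_file_ops *f_op;
    void *private_data;
};

/* Transport step: the kernel would attach the page to an skb here. */
static int toy_tcp_sendpages(struct toy_sock *sk, const void *page, int off, int size)
{
    (void)page;
    (void)off;
    sk->queued_bytes += size;
    return size;
}

/* socket -> sock */
static int toy_inet_sendpage(struct toy_socket *sock, const void *page, int off, int size)
{
    struct toy_sock *sk = sock->sk;
    return toy_tcp_sendpages(sk, page, off, size);
}

/* file -> socket */
static int toy_sock_sendpage(struct toy_file *file, const void *page, int off, int size)
{
    struct toy_socket *sock = file->private_data;
    return toy_inet_sendpage(sock, page, off, size);
}

const struct toy_file_ops toy_socket_file_ops = { .sendpage = toy_sock_sendpage };

/* Entry point, playing the role of the actor that holds only the file. */
int toy_pipe_to_sendpage(struct toy_file *file, const void *page, int off, int size)
{
    return file->f_op->sendpage(file, page, off, size);
}
```

The caller only ever sees the file; each layer recovers the next object from a pointer stored in the previous one.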

In do_tcp_sendpages:

Allocate an skb:

    skb = sk_stream_alloc_skb(sk, 0, sk->sk_allocation,
                              tcp_rtx_and_write_queues_empty(sk));

Add the skb to the sk->sk_write_queue list:

    skb_entail(sk, skb);

Get the index of the first free frags slot:

    i = skb_shinfo(skb)->nr_frags;

Associate the page with the skb:

    static inline void __skb_fill_page_desc(struct sk_buff *skb, int i,
                                            struct page *page, int off, int size)
    {
        skb_frag_t *frag = &skb_shinfo(skb)->frags[i];

        /*
         * Propagate page pfmemalloc to the skb if we can. The problem is
         * that not all callers have unique ownership of the page but rely
         * on page_is_pfmemalloc doing the right thing(tm).
         */
        frag->page.p = page;
        frag->page_offset = off;
        skb_frag_size_set(frag, size);

        page = compound_head(page);
        if (page_is_pfmemalloc(page))
            skb->pfmemalloc = true;
    }
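To make the "associate, do not copy" idea concrete, here is a user-space sketch of a buffer that records page fragments. The toy_ names and the four-slot limit are assumptions made for the example; reference counting is reduced to a plain counter, and any merging of adjacent fragments is left out.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_MAX_FRAGS 4u    /* hypothetical limit on fragments per buffer */

struct toy_page { int refcount; };

struct toy_frag {
    struct toy_page *page;
    unsigned int page_offset, size;
};

struct toy_skb {
    unsigned int nr_frags, len;
    struct toy_frag frags[TOY_MAX_FRAGS];
};

/* Attach (page, off, size) as the next fragment; returns 0, or -1 if no slot is free. */
int toy_skb_add_page(struct toy_skb *skb, struct toy_page *page,
                     unsigned int off, unsigned int size)
{
    unsigned int i = skb->nr_frags;     /* index of the first free slot */

    if (i >= TOY_MAX_FRAGS)
        return -1;

    skb->frags[i].page = page;          /* reference only: the payload is not copied */
    skb->frags[i].page_offset = off;
    skb->frags[i].size = size;
    page->refcount++;                   /* the buffer keeps the page alive */
    skb->nr_frags = i + 1;
    skb->len += size;
    return 0;
}
```

After the call, the fragment points at the very same page object that the pipe buffer referenced, which is the point of the whole path: the file data reaches the network buffer by reference.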